The technological landscape of our century is marked by a stunning pace of evolution. Each new advancement opens doors to possibilities both awe-inspiring and terrifying, shaping the ways we interact with the world around us. Perhaps as potent as any breakthrough in nuclear physics or artificial intelligence, the phenomenon known as ‘Deepfake’ has erupted into our lives. This article aims to unpack the technological intricacies of Deepfake, delve into its societal implications and shed light on its future prospects.

The term ‘Deepfake’ was coined in 2017 (1) as a blend of two concepts: ‘deep learning’ and ‘fake’. In essence, it refers to synthetic media that has been manipulated using machine learning. In its early days, Deepfake technology was mainly used by digitally savvy communities to produce amusing or satirical content, typically by swapping faces in videos, such as clips placing Hollywood figures like Jim Carrey into films they never starred in (2). This seemingly entertaining technology, however, has since evolved, and its ramifications have broadened.

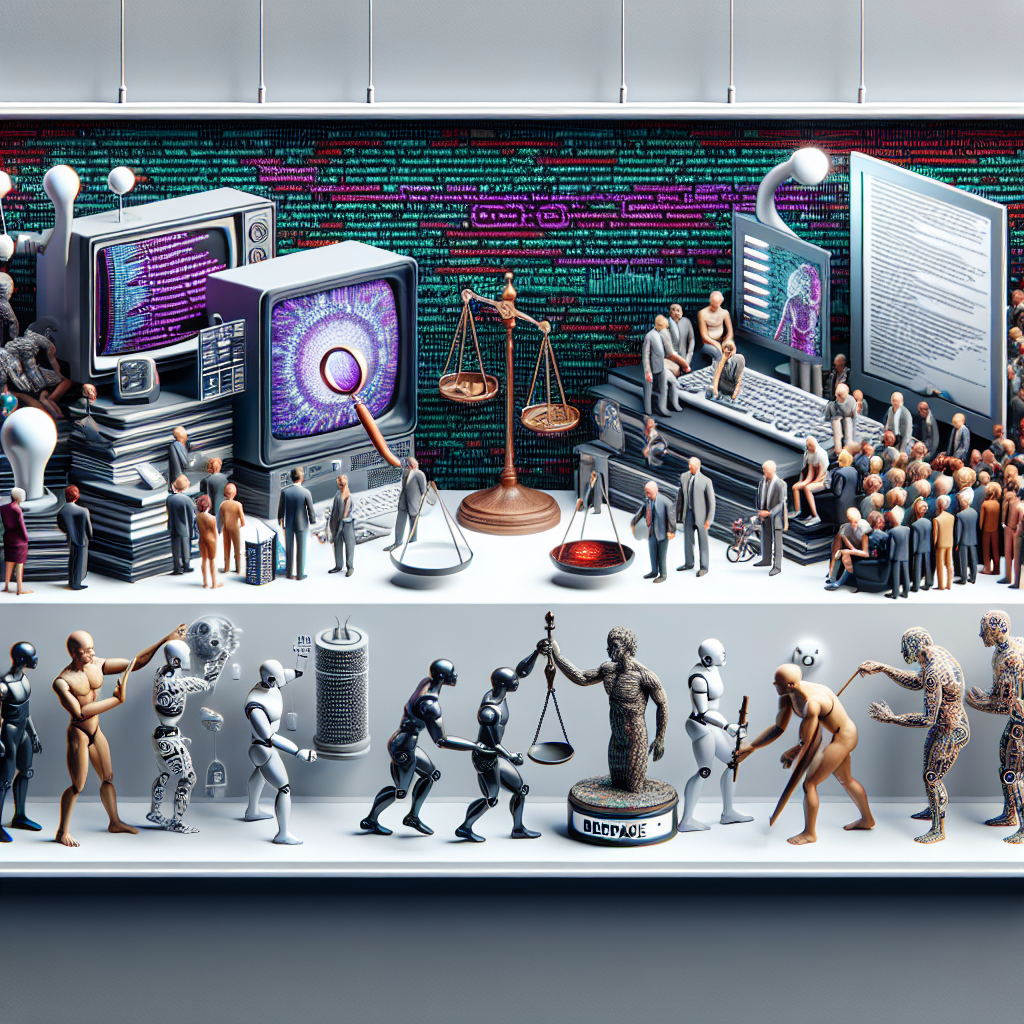

A few distinct themes can help us understand the complex dimensions of Deepfake. We can start with its core technologies and methods. Then, we can explore its social impacts, such as misinformation and the erosion of trust. Finally, we will delve into the legal and ethical implications, an arena fraught with controversy.

Deepfake thrives on an intersection of several AI sub-disciplines. A key technique is the Generative Adversarial Network (GAN), first introduced by Goodfellow et al. (3). GANs operate on a simple yet profound principle: two neural networks, a generator and a discriminator, compete in a zero-sum game. The generator produces fake data, while the discriminator tries to distinguish that data from real examples. As each network improves in response to the other, the fakes become increasingly convincing. As the technology progresses, so does the realism of the generated media, making it harder for humans, and even traditional machine learning algorithms, to identify them.
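
To make the adversarial game more concrete, here is a minimal Python sketch of the objective from the original GAN paper (3): the discriminator D tries to maximise V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], while the generator G tries to minimise it. The sketch only estimates V from a handful of discriminator scores; it trains no networks, and the helper name `gan_value` and the sample scores are illustrative assumptions, not part of any library.

```python
import math
from typing import Sequence


def gan_value(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Empirical estimate of the GAN value function V(D, G).

    Hypothetical helper for illustration only.
    d_real: discriminator scores D(x) on real samples, each strictly in (0, 1).
    d_fake: discriminator scores D(G(z)) on generated samples, each in (0, 1).
    The discriminator wants this value high; the generator wants it low.
    """
    # Average log-probability the discriminator assigns to "real" on real data.
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    # Average log-probability it assigns to "fake" on generated data.
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A sharp discriminator: high scores on real data, low scores on fakes.
print(gan_value([0.9, 0.95], [0.1, 0.05]))

# A fooled discriminator: it rates the fakes as highly as the real data,
# so the value drops, which is exactly what the generator is aiming for.
print(gan_value([0.9, 0.95], [0.9, 0.95]))

# At the theoretical equilibrium the discriminator can only guess,
# outputting 1/2 everywhere, and the value equals -log 4.
print(math.isclose(gan_value([0.5] * 4, [0.5] * 4), -math.log(4)))  # prints True
```

The equilibrium case reflects the result in Goodfellow et al.: once generated samples are indistinguishable from real ones, the discriminator's best strategy is a coin flip, which is why well-trained generators produce fakes that are so hard to detect.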

The societal consequences of Deepfake are profound and wide-ranging. Misinformation is possibly the most obvious concern. Fabricated videos of political figures, for instance, can be utilised to spread harmful propaganda or influence electoral outcomes (4). In a world already reeling from ‘fake news’, Deepfake threatens to further corrode trust in media.

In the darker corners of the web, Deepfake has been used to produce non-consensual pornography, often referred to as ‘revenge porn’. High-profile figures, mostly women, have become targets of this abuse of the technology, suffering psychological harm and damage to their public image (5).

The legal and ethical aspects of Deepfake remain a contentious gray area. Currently, few laws explicitly govern the use of Deepfake technologies (6). Matters become more complex still when the technology is used for satire or parody, which may fall under protected speech in many countries.

Deepfake also throws many ethical dilemmas into sharp relief. The fine line between free speech and misinformation can blur dangerously in this context. Furthermore, the question of consent in the creation of synthetic media introduces another ethical dimension. How should consent be obtained from the individuals whose images and voices are used to train these models, and what rights do they retain over the output?

On the horizon, the prospects for Deepfake are mixed. While some predict the worst, foreseeing a world where seeing is no longer believing (7), others hold out hope. From making sophisticated VFX accessible to low-budget filmmakers to producing voiceovers in several languages, Deepfake technology has the potential to revolutionise diverse fields (8).

However, our capacity to manage Deepfake’s societal ramifications largely hinges on implementing effective legislation, raising public awareness and advancing digital forensic tools to detect fakes. The stakes are high, making this technology worthy of our continued vigilance and ethical deliberation.

As we close, consider the question posed by John Villasenor, a cybersecurity expert at the Brookings Institution: “What happens when anyone with a computer can make it appear as if anyone said anything?” (9) In the nuances of that question lies our collective challenge: deep and uncharted, much like the technology itself.

References and Further Reading

- Franceschi-Bicchierai, L. (2018) ‘The History of “Deepfakes”’, Vice.

- ‘The Shining starring Jim Carrey: Episode 1 – Concentration [DeepFake]’, YouTube. https://youtu.be/HG_NZpkttXE?feature=shared

- Goodfellow, I., et al. (2014) ‘Generative Adversarial Nets’, NIPS.

- Westerlund, M. (2019) ‘The Era of Deepfakes: The Implications for Privacy & Public Trust’, Medium.

- Hao, K. (2019) ‘Deepfake revenge porn is now illegal in Virginia’, MIT Technology Review.

- Chesney, R., Citron, D. (2019) ‘Deep Fakes: A Looming Challenge for Privacy, Democracy, and National Security’, California Law Review.

- Oberhaus, D. (2020) ‘Seeing is no longer believing’, Wired.

- Vincent, J. (2019) ‘Why 2019 was the year of the deepfake’, The Verge.

- Villasenor, J. (2018) ‘Artificial intelligence, deepfakes, and the uncertain future of truth’, Brookings.

- ‘Deepfakes’, Future of Privacy Forum.

- Citron, D. (2019) ‘How Deepfakes Undermine Truth and Threaten Democracy’, TED.

- Chesney, R., Citron, D. (2018) ‘Deep Fakes: A Looming Crisis for National Security, Democracy and Privacy?’, Lawfare.

- Garimella, K., Tyson, G. (2020) ‘What is real? The effect of placebic and informed perspective on user judgments of blue checkmarks on Twitter’, Information Processing & Management.
